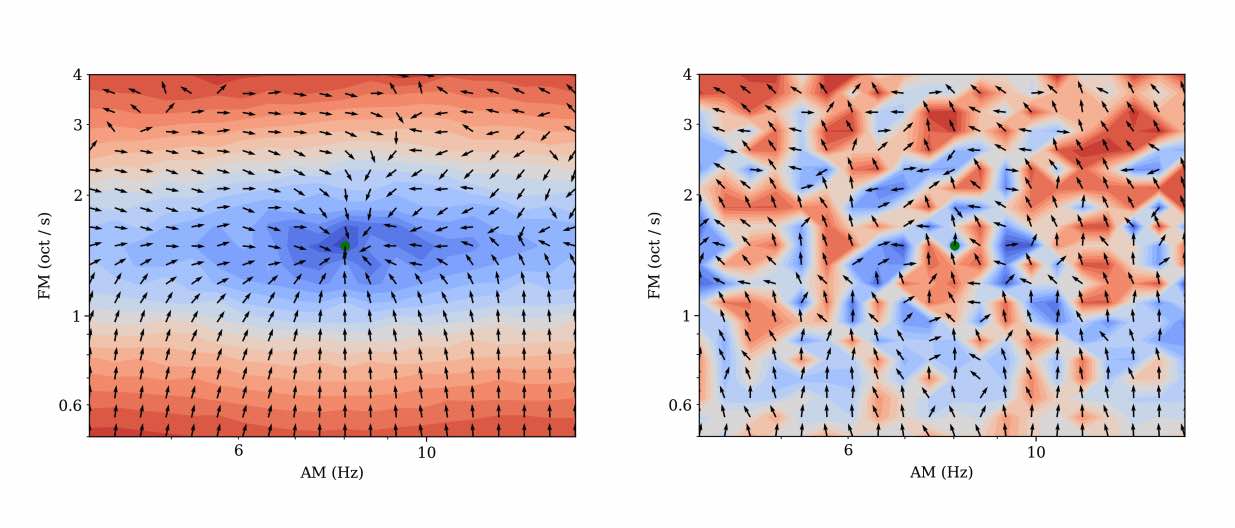

Computer musicians refer to mesostructures as the intermediate levels of articulation between the microstructure of waveshapes and the macrostructure of musical forms. Examples of mesostructures include melody, arpeggios, syncopation, polyphonic grouping, and textural contrast. Despite their central role in musical expression, they have received limited attention in recent applications of deep learning to the analysis and synthesis of musical audio. Currently, autoencoders and neural audio synthesizers are trained and evaluated only at the scale of microstructure, i.e., local amplitude variations up to about 100 milliseconds. In this paper, we formulate and address the problem of mesostructural audio modeling via a composition of a differentiable arpeggiator and time-frequency scattering. We empirically demonstrate that time-frequency scattering serves as a differentiable model of similarity between synthesis parameters that govern mesostructure. By exposing the sensitivity of short-time spectral distances to time alignment, we motivate the need for a time-invariant and multiscale differentiable time-frequency model of similarity at the level of both local spectra and spectrotemporal modulations.
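The point about short-time spectral distances and time alignment can be illustrated numerically. The sketch below is a toy setup, not the experiment from the paper: it builds a Gaussian-windowed sine grain, shifts it in time, and measures the Euclidean distance between magnitude spectrograms. The frame size, hop size, grain parameters, and the helper names `stft_magnitude` and `grain` are assumptions chosen for illustration.

```python
import numpy as np


def stft_magnitude(x, frame=512, hop=128):
    """Magnitude STFT with a periodic Hann window and no padding."""
    n = np.arange(frame)
    window = 0.5 - 0.5 * np.cos(2 * np.pi * n / frame)
    n_frames = 1 + (len(x) - frame) // hop
    frames = np.stack([x[i * hop:i * hop + frame] * window for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames, axis=1))


def grain(onset, length=16384, sr=16000, freq=440.0, sigma=80.0):
    """Hypothetical test signal: a sine burst with a Gaussian envelope centered at `onset`.

    The carrier phase is tied to the onset, so grain(onset + tau) is an exact
    time-shifted copy of grain(onset).
    """
    t = np.arange(length) - onset
    return np.exp(-0.5 * (t / sigma) ** 2) * np.sin(2 * np.pi * freq * t / sr)


reference = stft_magnitude(grain(onset=2048))
shifts = [0, 128, 256, 512, 1024, 2048, 4096]

# Distance between the reference spectrogram and that of a time-shifted grain.
distances = {
    tau: float(np.linalg.norm(reference - stft_magnitude(grain(onset=2048 + tau))))
    for tau in shifts
}
for tau, d in distances.items():
    print(f"shift = {tau:5d} samples -> spectral distance = {d:.4f}")
```

Once the shift exceeds the combined span of the grain and the analysis frame, the two spectrograms no longer overlap and the distance sits on a constant plateau (√2 times the reference norm in this setup). On that plateau the distance no longer depends on the onset, so its gradient with respect to timing vanishes; this is one way to read the motivation for a time-invariant, multiscale model of similarity.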

Author: Vincent Lostanlen

Fitting Auditory Filterbanks with MuReNN @ IEEE WASPAA

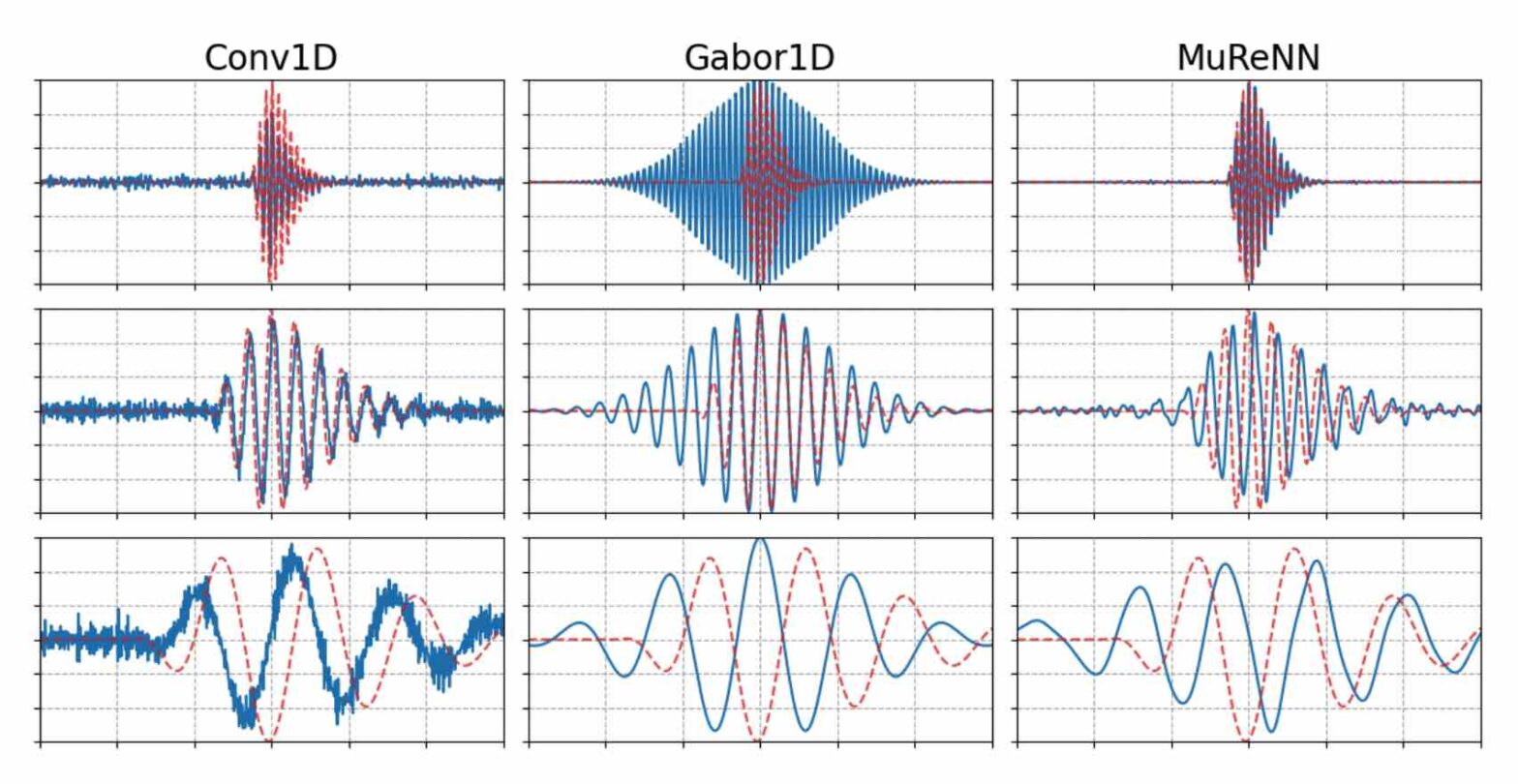

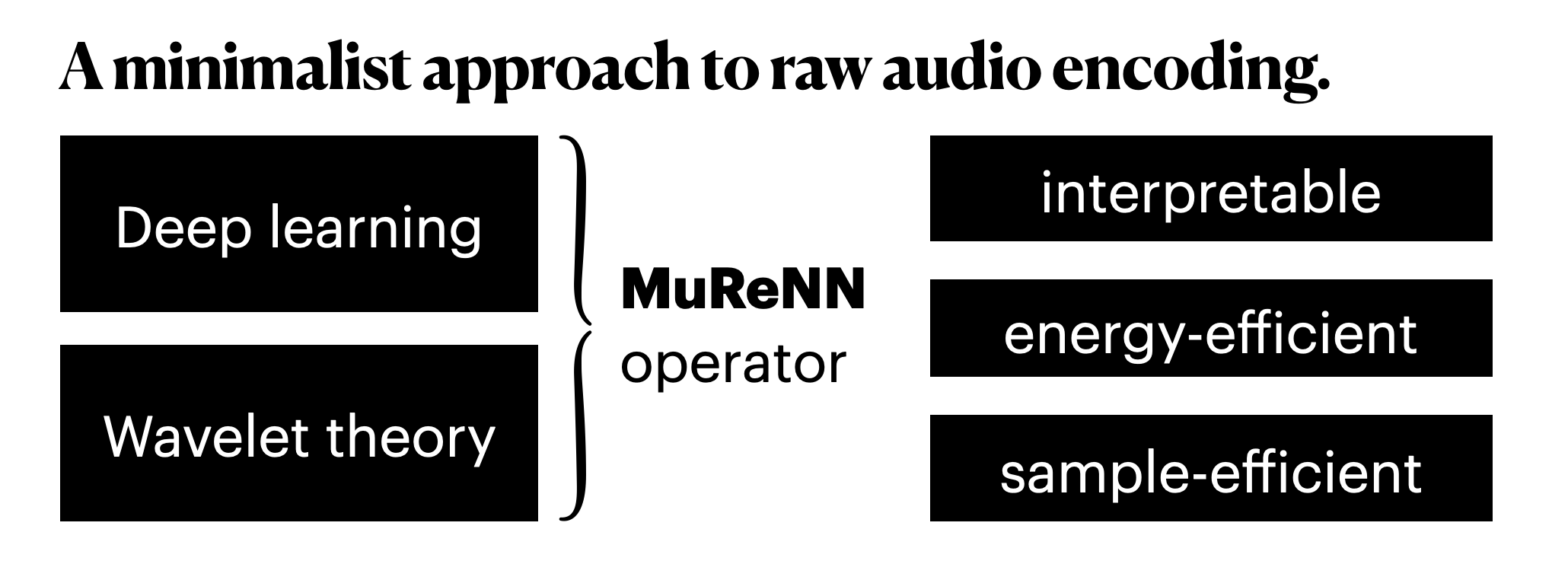

Waveform-based deep learning faces a dilemma between nonparametric and parametric approaches. On the one hand, convolutional neural networks (convnets) may approximate any linear time-invariant system; yet, in practice, their frequency responses become more irregular as their receptive fields grow. On the other hand, a parametric model such as LEAF is guaranteed to yield Gabor filters, hence an optimal time-frequency localization; yet, this strong inductive bias comes to the detriment of representational capacity. In this paper, we aim to overcome this dilemma by introducing a neural audio model, named multiresolution neural network (MuReNN). The key idea behind MuReNN is to train separate convolutional operators over the octave subbands of a discrete wavelet transform (DWT). Since the scale of DWT atoms grows exponentially between octaves, the receptive fields of the subsequent learnable convolutions in MuReNN are dilated accordingly. For a given real-world dataset, we fit the magnitude response of MuReNN to that of a well-established auditory filterbank: Gammatone for speech, CQT for music, and third-octave for urban sounds, respectively. This is a form of knowledge distillation (KD), in which the filterbank “teacher” is engineered by domain knowledge while the neural network “student” is optimized from data. We compare MuReNN to the state of the art in terms of goodness of fit after KD on a held-out set and in terms of Heisenberg time-frequency localization. Compared to convnets and Gabor convolutions, we find that MuReNN reaches state-of-the-art performance on all three optimization problems.
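The key idea of MuReNN, separate convolutions over the octave subbands of a DWT, can be sketched in a few lines of NumPy. This is not the authors' implementation: it uses an orthogonal Haar DWT for simplicity (the wavelet used in MuReNN may differ), random kernels stand in for the learnable convolutions, and the function names are hypothetical. It checks that the DWT preserves energy and shows how a fixed kernel size `K` spans `K * 2**j` input samples at octave `j`.

```python
import numpy as np


def haar_dwt(x, levels):
    """Orthogonal Haar DWT returning [detail_1, ..., detail_J, approximation_J].

    The input length must be divisible by 2**levels.
    """
    approx = np.asarray(x, dtype=float)
    subbands = []
    for _ in range(levels):
        even, odd = approx[0::2], approx[1::2]
        subbands.append((even - odd) / np.sqrt(2))  # highpass half of the current band
        approx = (even + odd) / np.sqrt(2)          # lowpass half, decimated by 2
    subbands.append(approx)
    return subbands


def multiresolution_conv(x, kernels, levels):
    """Hypothetical MuReNN-style layer: a separate FIR filter for each octave subband."""
    subbands = haar_dwt(x, levels)
    return [np.convolve(band, kernel, mode="same") for band, kernel in zip(subbands, kernels)]


def receptive_fields(kernel_size, levels):
    """Span, in input samples, of one K-tap kernel applied at each subband's stride."""
    strides = [2 ** j for j in range(1, levels + 1)] + [2 ** levels]
    return [kernel_size * stride for stride in strides]


rng = np.random.default_rng(0)
levels, kernel_size = 5, 9
x = rng.standard_normal(1024)
# Random stand-ins for the per-octave learnable weights.
kernels = [rng.standard_normal(kernel_size) for _ in range(levels + 1)]

subbands = haar_dwt(x, levels)
outputs = multiresolution_conv(x, kernels, levels)
print([len(band) for band in subbands])
print(receptive_fields(kernel_size, levels))
```

Because the kernel size stays fixed while the subband sampling rate halves at each octave, low-frequency filters obtain long impulse responses without extra parameters, which mirrors the dilation of receptive fields described in the abstract.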

Timing of dawn chorus in songbirds along an anthropization gradient @ ICCB

On the basis of a field survey conducted on a breeding bird community (37 species) in spring 2022 in France, we disentangle the relative influence of such factors on the timing of the bird chorus, at both the species and community levels. Human activities thus not only drive temporal changes in individual bird species but also alter the temporal structure of the chorus at the level of the whole community.

BioacAI: Understanding animal sounds with machine learning

Official website: https://bioacousticai.eu

The biodiversity crisis is coming into focus. Yet, data for monitoring wild animal populations are still incomplete and uncertain. And there are still big gaps in our understanding of animal behaviour and interactions. Animals make sounds that convey so much information. How can we use this to help monitor and protect wildlife?…

PhD offer: “Theory and implementation of multi-resolution neural networks”

The French national center for scientific research (CNRS) is hiring a PhD student as part of a three-year project on “Multi-Resolution Neural Networks” (MuReNN). MuReNN is supported by the French national funding agency (ANR), and hosted at the Laboratoire des Sciences du Numérique de Nantes (LS2N). A collaboration with the Austrian Academy of Sciences is…

Thematic network “Capteurs en environnement” (RTCE, Sensors in the Environment)

Official website: https://www.reseau-capteurs.cnrs.fr/

Forum: https://rtce.forum.inrae.fr

The network aims to bring together everyone working in disciplines dedicated to in natura measurement, in natural or semi-natural environments that are often heavily anthropized. All systems and ecosystems are considered: atmosphere, biosphere, the inner and outer Earth, lakes, oceans, glaciers and ice sheets,…

MuReNN: Multi-Resolution Neural Networks

“Less is more”, once the foundational motto of minimalist art, is making its way into artificial intelligence. After a maximalist decade of larger computers training larger neural networks on larger datasets (2012-2022), a countertrend arises. What if human-level performance could be achieved with less computing, less memory, and less supervision? In deep learning, the research…

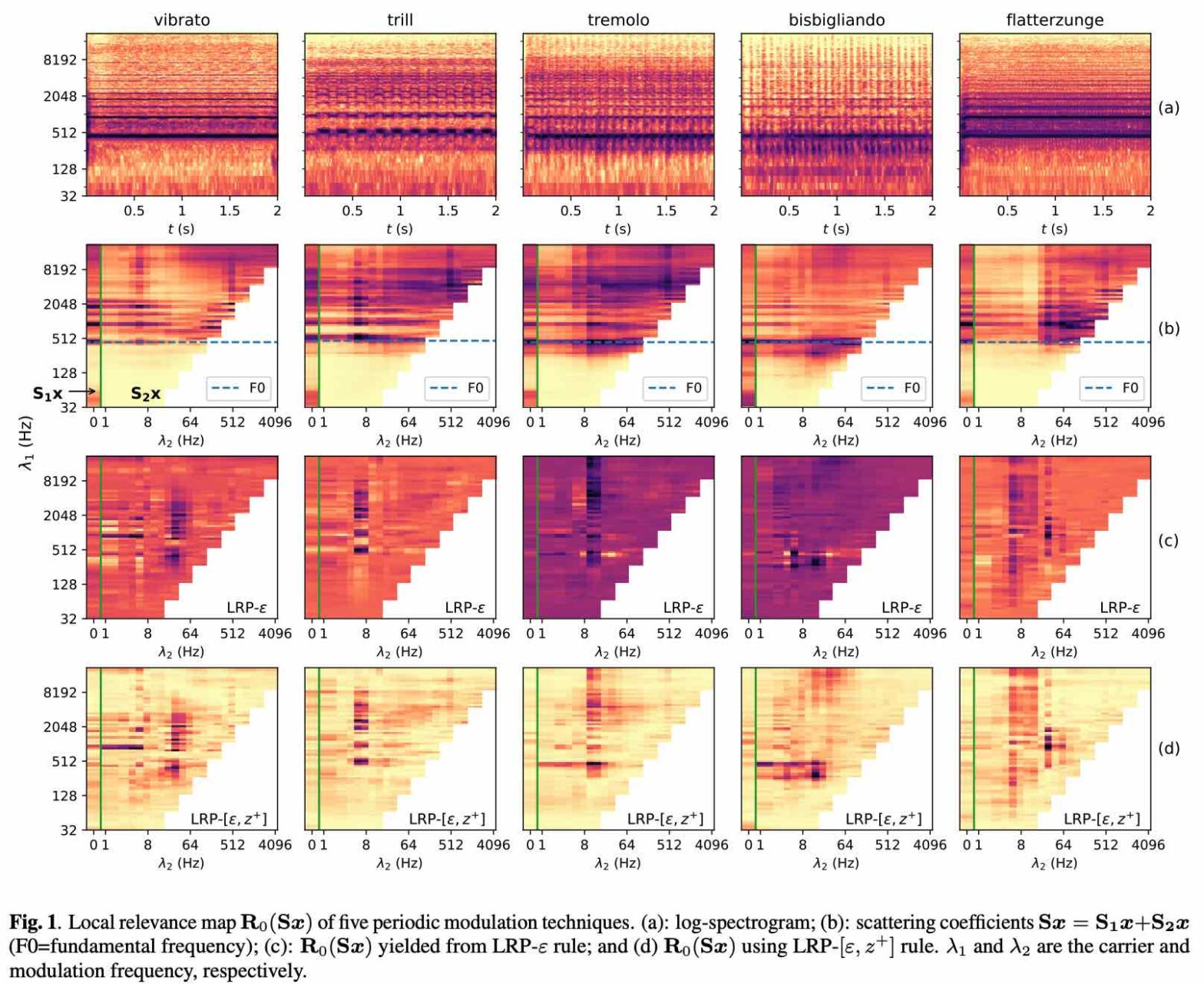

Explainable audio classification of playing techniques with layerwise relevance propagation @ IEEE ICASSP

Deep convolutional networks (convnets) in the time-frequency domain can learn an accurate and fine-grained categorization of sounds. For example, in the context of music signal analysis, this categorization may correspond to a taxonomy of playing techniques: vibrato, tremolo, trill, and so forth. However, convnets lack an explicit connection with the neurophysiological underpinnings of musical timbre perception. In this article, we propose a data-driven approach to explain audio classification in terms of physical attributes in sound production. We borrow from current literature in “explainable AI” (XAI) to study the predictions of a convnet that achieves an almost perfect score on a challenging task: i.e., the classification of five comparable real-world playing techniques from 30 instruments spanning seven octaves. Mapping the signal into the carrier-modulation domain using the scattering transform, we decompose the network’s predictions over this domain with layer-wise relevance propagation. We find that the regions most relevant to the predictions are localized around the physical attributes with which the playing techniques are performed.
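To make layer-wise relevance propagation concrete, here is a minimal sketch of the LRP-ε rule on a toy two-layer ReLU network without biases. It is not the convnet from the paper: random non-negative features stand in for scattering coefficients, and the helper name `lrp_epsilon` is hypothetical. The printed sums illustrate the conservation property of LRP: the input relevances add up, approximately, to the output score.

```python
import numpy as np


def lrp_epsilon(activations, weights, relevance_out, eps=1e-9):
    """LRP-epsilon rule for a bias-free dense layer.

    R_i = a_i * sum_j w_ij * R_j / (z_j + eps * sign(z_j)), with z_j = sum_i a_i w_ij
    and sign(0) taken as +1.
    """
    z = activations @ weights                          # pre-activations of the layer
    stabilizer = eps * np.where(z >= 0, 1.0, -1.0)     # avoids division by zero
    s = relevance_out / (z + stabilizer)
    return activations * (weights @ s)


rng = np.random.default_rng(0)
n_features, n_hidden = 16, 8
x = rng.random(n_features)                   # stand-in for non-negative scattering coefficients
w1 = rng.standard_normal((n_features, n_hidden))
w2 = rng.standard_normal((n_hidden, 1))

# Forward pass: ReLU hidden layer, linear output score for one class.
h = np.maximum(x @ w1, 0.0)
score = h @ w2                               # shape (1,)

# Relevance pass: start from the score and propagate back to the input features.
r_hidden = lrp_epsilon(h, w2, score)
r_input = lrp_epsilon(x, w1, r_hidden)

print("score:", score.item())
print("sum of input relevances:", r_input.sum())
print("most relevant features:", np.argsort(r_input)[::-1][:3])
```

Here ε only guards against division by zero; larger values of ε absorb part of the relevance, trading exact conservation for sparser, less noisy explanations.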

Can innovation lead to greater sobriety in recorded music?

A round table on the ecological challenges of recorded music, organized by the Centre national de la musique (CNM) during the Rencontres de l’innovation dans la musique 2023. This round table coincides with the release of the collection “Musique et données” (Music and Data).

With:

Moderator: Emily Gonneau – Causa

Ecology of digital music

A French-language article in the latest collection of the Centre national de la musique (CNM). Here is its abstract: It is time to give up on the utopia of music that is fully available, to everyone, everywhere, right away. On the contrary, the musical audio stream is materialized in its objects, limited in its architectures…