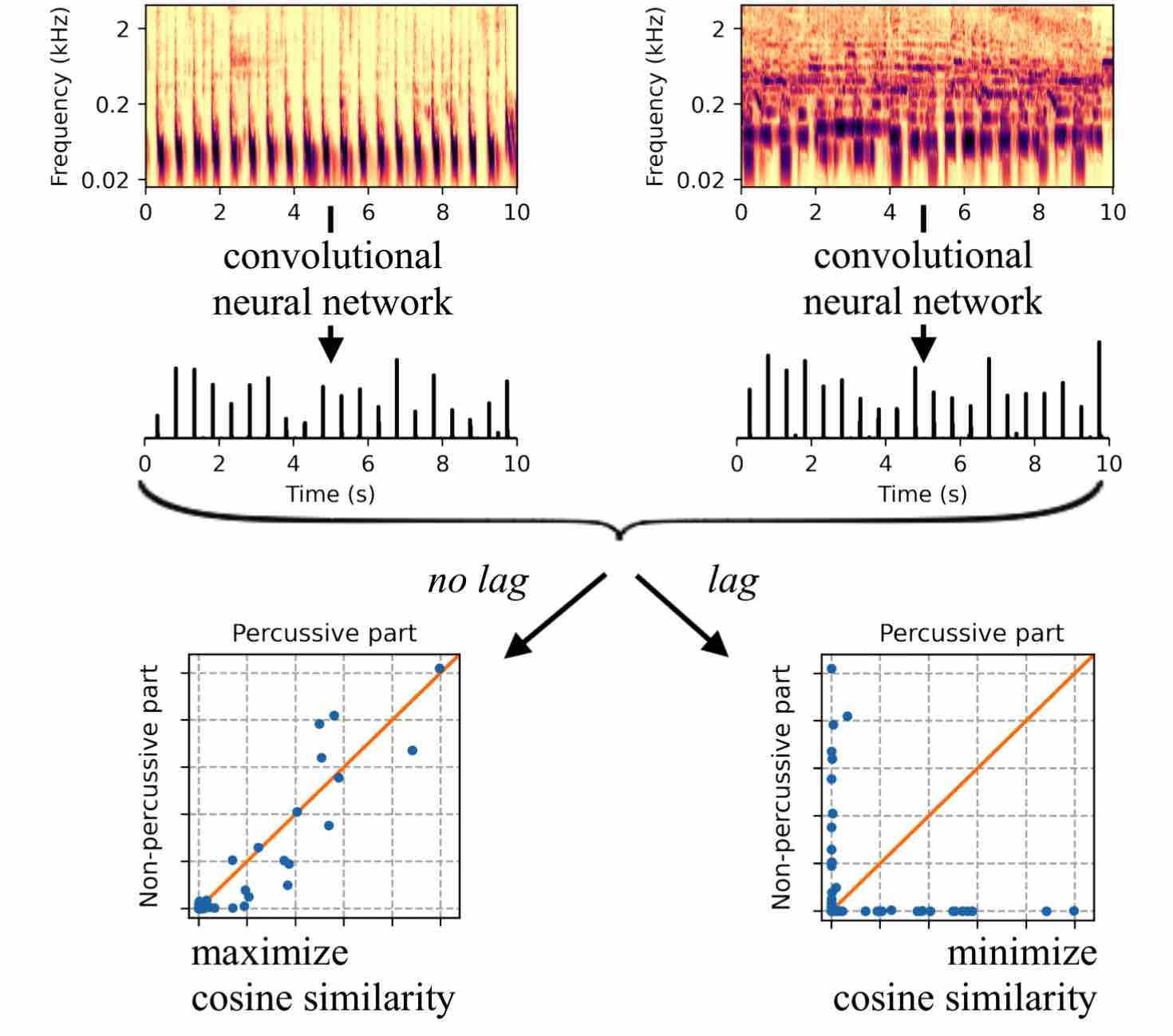

Zero-Note Samba: Self-Supervised Beat Tracking

Journal article

Authors: Dorian Desblancs, Vincent Lostanlen, Romain Hennequin.

Published in: IEEE/ACM Transactions on Audio, Speech and Language Processing

Publication date: 2023

Blind source separation, Multi-layer neural network, Music information retrieval, Unsupervised learning