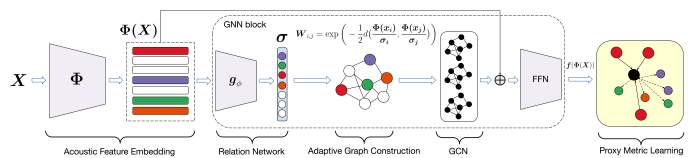

Perceptual musical similarity metric learning with graph neural networks

Conference paper

Authors: Cyrus Vahidi, Shubhr Singh, Emmanouil Benetos, Huy Phan, Dan Stowell, György Fazekas, Mathieu Lagrange.

Conference: IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA 2023)

Publication date: 2023

Keywords: Auditory similarity, Content-based music retrieval, Graph neural networks, Metric learning