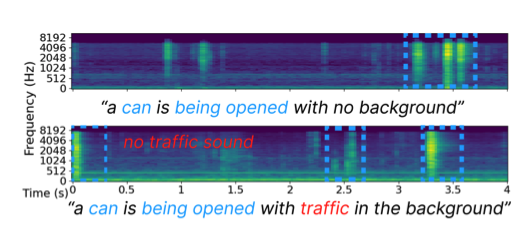

Despite significant advances in neural text-to-audio generation, challenges persist in controllability and evaluation. This paper addresses these issues through the Sound Scene Synthesis challenge held as part of the Detection and Classification of Acoustic Scenes and Events 2024. We present an evaluation protocol that combines an objective metric, namely Fréchet Audio Distance, with perceptual assessments, using a structured prompt format to enable diverse captions and effective evaluation. Our analysis reveals varying performance across sound categories and model architectures, with larger models generally excelling but innovative lightweight approaches also showing promise. The strong correlation between objective metrics and human ratings validates our evaluation approach. We discuss outcomes in terms of audio quality, controllability, and architectural considerations for text-to-audio synthesizers, providing direction for future research.
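To make the reported agreement between objective metrics and human ratings concrete, here is a minimal sketch of one way such agreement could be measured: a Spearman rank correlation between per-system Fréchet Audio Distance scores and mean listener ratings. The abstract does not say which correlation coefficient was used, so the choice of Spearman is an assumption, and the function names and the numbers in the example are hypothetical placeholders, not challenge results.

```python
import math
from typing import Sequence


def average_ranks(values: Sequence[float]) -> list[float]:
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the group while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rank correlation: Pearson correlation of the rank vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical placeholder values for four systems (NOT challenge results).
fad_scores = [2.1, 3.4, 5.0, 7.2]      # lower is better
mean_ratings = [8.0, 6.5, 6.9, 3.1]    # higher is better
rho = spearman(fad_scores, mean_ratings)
print(f"Spearman rho between FAD and ratings: {rho:.2f}")
```

Because a lower Fréchet Audio Distance indicates better audio while a higher rating does, agreement between the two shows up as a strongly negative coefficient.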

Tag: synthesis

GdR IASIS workshop day on “Audio synthesis” at Ircam

As part of the CNRS special interest group on signal and image processing (GdR IASIS), we are organizing a 1-day workshop on audio synthesis at Ircam on November 7th, 2024.

As part of the “Audio, Vision, Perception” axis of GdR IASIS, we are organizing a study day dedicated to audio synthesis. The event will take place on Thursday, November 7, 2024 at Ircam (STMS laboratory) in Paris.

Content-Based Indexing for Audio and Music: From Analysis to Synthesis

Audio has long been a key component of multimedia research. For indexing in particular, the research and industrial context has changed drastically over the last 20 years or so. Today, applications of audio indexing range from karaoke to singing voice synthesis and creative audio design. This special session brings together researchers who propose new tools or paradigms for investigating audio and music processing in the context of indexing and corpus-based generation.

Mathieu, Vincent, and Modan present at DCASE

Our group presented two challenge tasks and two papers at the international workshop on Detection and Classification of Acoustic Scenes and Events (DCASE), held in Tampere (Finland) in September 2023.

Foley sound synthesis at the DCASE 2023 challenge

The addition of Foley sound effects during post-production is a common technique used to enhance the perceived acoustic properties of multimedia content. Traditionally, Foley sound has been produced by human Foley artists, which involves manual recording and mixing of sound. However, recent advances in sound synthesis and generative models have generated interest in machine-assisted or…